Berkant Turan

ML Researcher @ IOL Lab at ZIB | PhD Candidate @ TU Berlin | Member @ Berlin Mathematical School

I am a third-year PhD candidate at the Institute of Mathematics, TU Berlin and a research associate at Zuse Institute Berlin (ZIB). Under the supervision of Prof. Sebastian Pokutta, I am currently part of the Interactive Optimization and Learning (IOL) research group at ZIB. Additionally, I am a member of the Berlin Mathematical School (BMS), which is part of the Math+ Excellence Cluster.

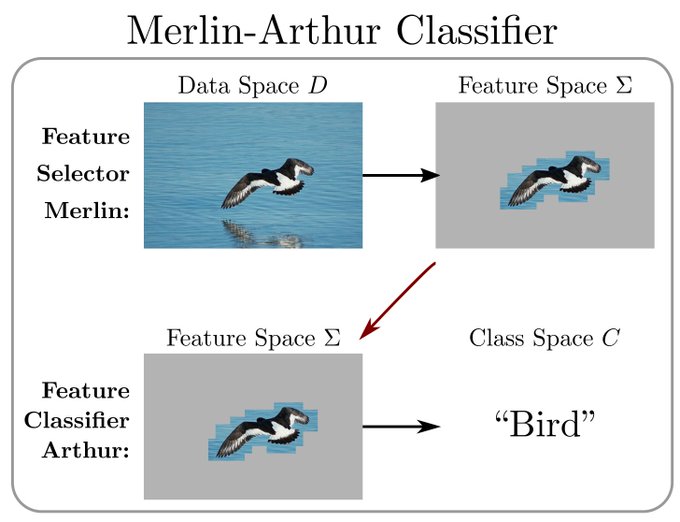

My research interests focus on the interpretability, robustness, and safe deployment of neural networks in high-stakes applications. I develop interactive, multi-agent models that provide provable insights into the decision-making processes of black-box systems. By utilizing feature selectors within an adversarial framework, I aim to expose the reasoning behind model predictions, addressing core challenges in the interpretability and security of complex AI systems.

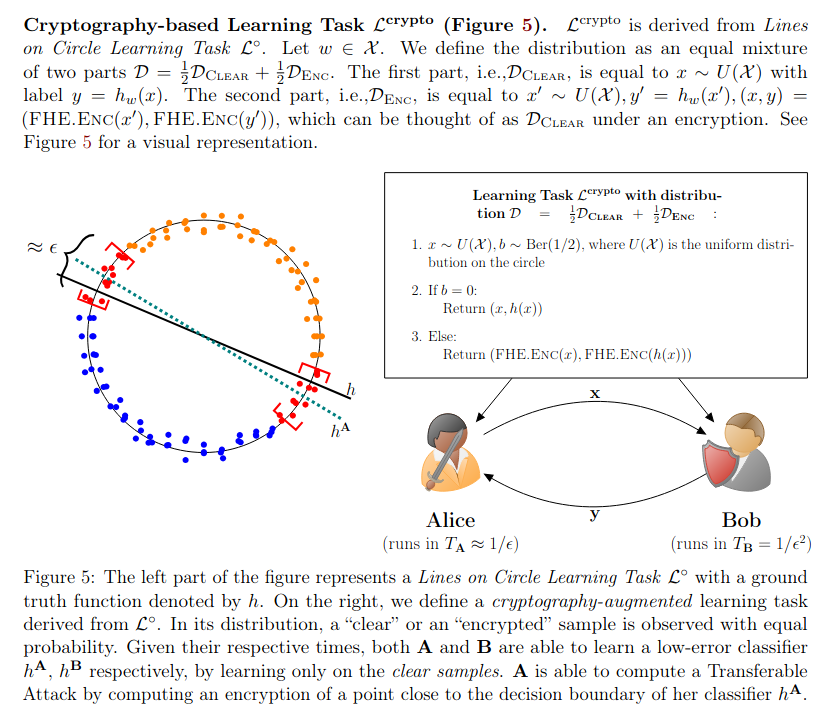

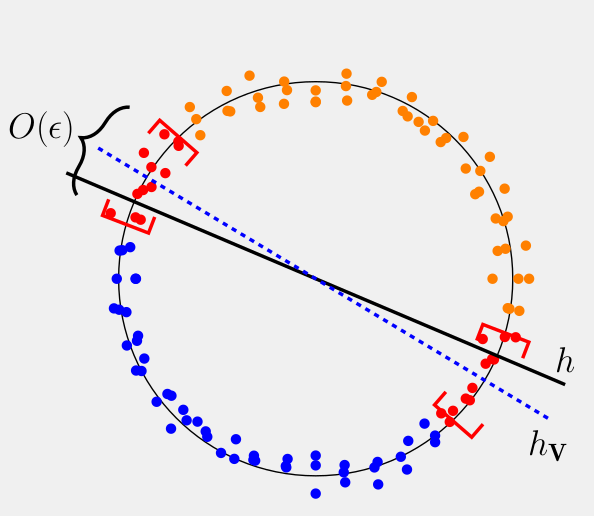

Additionally, I am interested in the interconnections between model security approaches, such as adversarial robustness and backdoor-based watermarks, and the theoretical limits tied to these techniques in different learning tasks. My work also investigates transferable attacks, which exploit vulnerabilities across multiple defenses using cryptographic tools, uncovering fundamental links between AI security and cryptography.

Before starting my PhD, I focused on Deep Hybrid Discriminative-Generative Modeling, investigating the optimization and behavior of Variational Autoencoders and Residual Networks for out-of-distribution detection, robustness, and calibration in computer vision tasks.

news

| Date | News |
|---|---|
| 06/2025 | Happy to share that Neural Concept Verifier: Scaling Prover-Verifier Games Via Concept Encodings has been accepted at the ICML 2025 Workshop on Actionable Interpretability! Grateful to collaborate with S. Asadulla, D. Steinmann, W. Stammer, and S. Pokutta on advancing interpretable AI through formal verification methods. |
| 05/2025 | Excited to announce that our collaborative work on Capturing Temporal Dynamics in Large-Scale Canopy Tree Height Estimation has been accepted at ICML 2025! Many thanks to my amazing collaborators J. Pauls, M. Zimmer, S. Saatchi, P. Ciais, S. Pokutta, and F. Gieseke for this interdisciplinary project bridging machine learning and environmental science. |
| 03/2025 | Great news! The Good, the Bad and the Ugly: Watermarks, Transferable Attacks and Adversarial Defenses has been accepted at the ICLR 2025 Workshop on GenAI Watermarking. |
| 10/2024 | Excited to announce that The Good, the Bad and the Ugly: Watermarks, Transferable Attacks and Adversarial Defenses is now available on arXiv! Many thanks to my collaborators, Grzegorz Głuch (EPFL at the time), Sai Ganesh Nagarajan (ZIB), and Sebastian Pokutta (ZIB), for their contributions to this project! |
| 06/2024 | Unified Taxonomy of AI Safety: Watermarks, Adversarial Defenses and Transferable Attacks has been accepted at the ICML 2024 Workshop on Theoretical Foundations of Foundation Models. See you in Vienna! |
| 03/2024 | Our recent paper, Interpretability Guarantees with Merlin-Arthur Classifiers, has been accepted at AISTATS 2024. Looking forward to meeting you in Valencia. |
| 07/2023 | I received the Best Proposal Award at the xAI-2023 Doctoral Consortium in Lisbon for my research on Extending Merlin-Arthur Classifiers for Improved Interpretability. Thank you to the reviewers and organizers! |
| 09/2022 | Excited to have started my PhD at TU Berlin and the Zuse Institute Berlin in the Interactive Optimization and Learning research lab, under the supervision of Sebastian Pokutta. |