Berkant Turan

ML Researcher @ IOL Lab at ZIB | PhD Candidate @ TU Berlin | Member @ Berlin Mathematical School

I am a fourth-year PhD candidate at the Institute of Mathematics, TU Berlin, and a research associate at Zuse Institute Berlin (ZIB). I work in the Interactive Optimization and Learning (IOL) research group at ZIB under the supervision of Prof. Sebastian Pokutta. I am also a member of the Berlin Mathematical School (BMS), which is part of the MATH+ Cluster of Excellence.

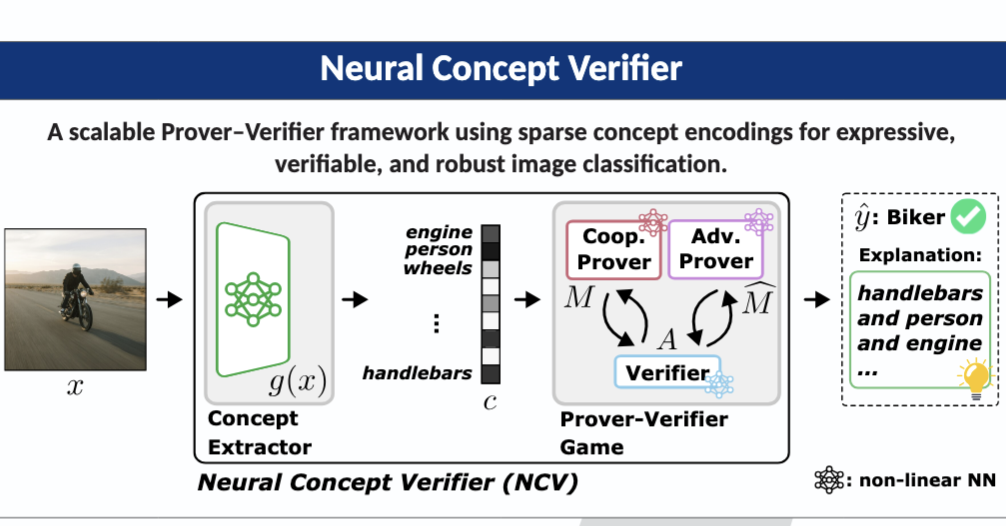

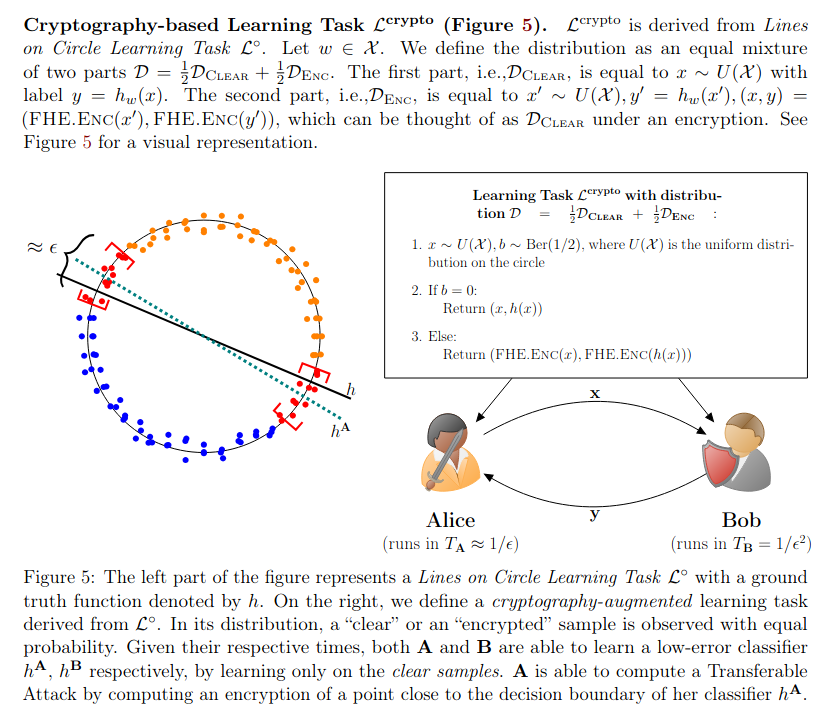

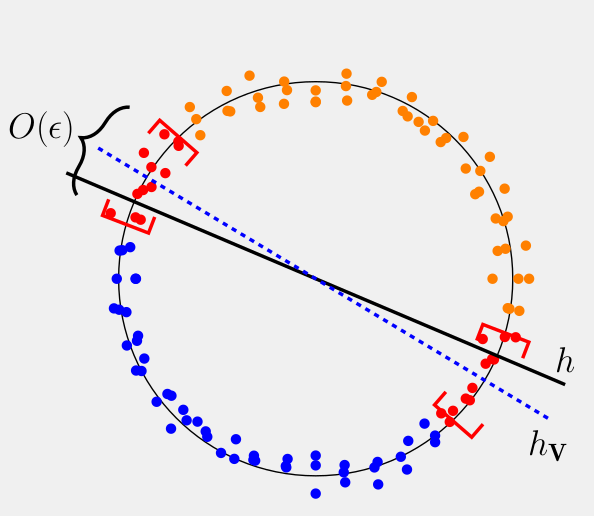

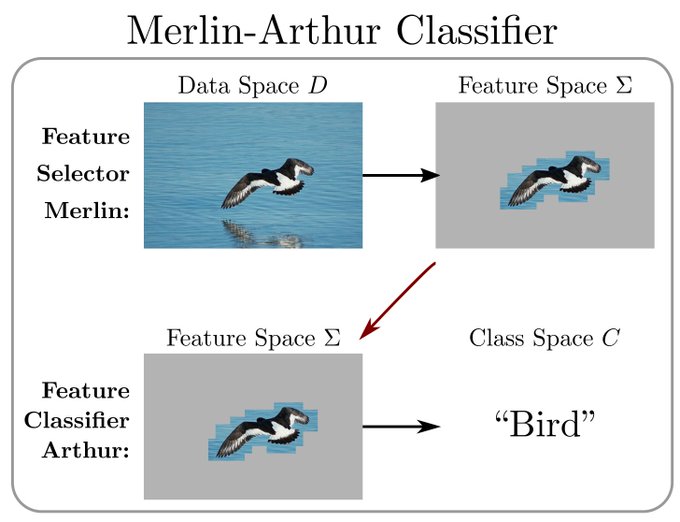

My research centers on Prover-Verifier Games for Trustworthy Machine Learning. I develop interactive, multi-agent models (such as Merlin-Arthur classifiers and concept-based verifiers) that provide provable interpretability guarantees: the evidence used for a prediction can be checked and formally linked to the model's decision. I am also interested in the theoretical limits of model security, including adversarial robustness, backdoor-based watermarks, and transferable attacks, as well as their connections to cryptography.

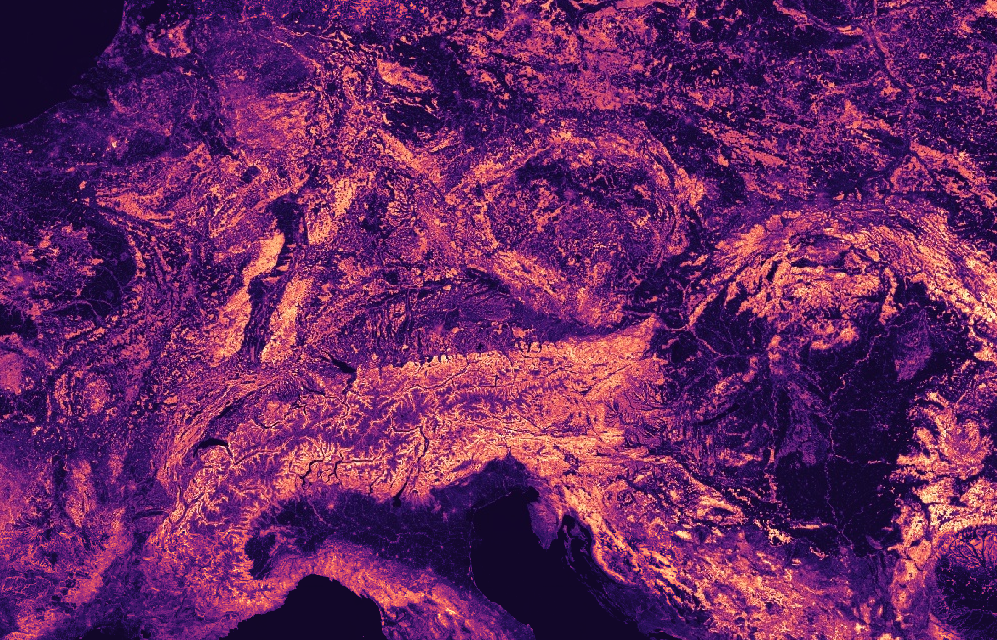

A second line of my work focuses on AI-based methods for Earth observation, in particular high-resolution forest monitoring. As part of the AI4Forest project at ZIB, I develop scalable ML pipelines for canopy height estimation and biomass mapping from satellite data (e.g., Sentinel-1/2 and GEDI), supporting large-scale ecological monitoring and climate research.

Before starting my PhD, I worked on Deep Hybrid Discriminative-Generative Modeling, investigating Variational Autoencoders and Residual Networks for out-of-distribution detection, robustness, and calibration in computer vision.

news

| Date | News |
|---|---|
| 09/2025 | Thrilled to announce that *The Good, the Bad and the Ugly: Meta-Analysis of Watermarks, Transferable Attacks and Adversarial Defenses* has been accepted at NeurIPS 2025! Huge thanks to my collaborators Grzegorz Głuch, Sai Ganesh Nagarajan, and Sebastian Pokutta. |
| 06/2025 | Happy to share that *Neural Concept Verifier: Scaling Prover-Verifier Games Via Concept Encodings* has been accepted at the ICML 2025 Workshop on Actionable Interpretability! Grateful to have collaborated with S. Asadulla, D. Steinmann, K. Kersting, W. Stammer, and S. Pokutta on advancing interpretable AI through formal verification methods. |
| 05/2025 | Excited to announce that our collaborative work *Capturing Temporal Dynamics in Large-Scale Canopy Tree Height Estimation* has been accepted at ICML 2025! Many thanks to my amazing collaborators J. Pauls, M. Zimmer, S. Saatchi, P. Ciais, S. Pokutta, and F. Gieseke for this interdisciplinary project bridging machine learning and environmental science. |
| 03/2025 | Great news! *The Good, the Bad and the Ugly: Watermarks, Transferable Attacks and Adversarial Defenses* has been accepted at the ICLR 2025 Workshop on GenAI Watermarking. |
| 10/2024 | Excited to announce that *The Good, the Bad and the Ugly: Watermarks, Transferable Attacks and Adversarial Defenses* is now available on arXiv! Many thanks to my collaborators Grzegorz Głuch (EPFL at the time), Sai Ganesh Nagarajan (ZIB), and Sebastian Pokutta (ZIB) for their contributions to this project! |